Background

AI is, without a doubt, the hottest news topic these days. Much of the hype comes from the corporations poised to make trillions off its success, but it would be disingenuous of me to dismiss the innovations AI has brought and will bring, such as shortening the time from initial scan to diagnosis in medicine. However, as the title suggests, this post is not about that.

Training AI models requires enormous amounts of data, and the conclusion AI companies came to was to indiscriminately scrape the entire internet. Obviously, this came with a whole host of problems, and it especially angered publishers. I am not going to comment on the morality of indiscriminately scraping the public web or on whether it constitutes fair use, at least not in this post. But given that the NYT has ongoing lawsuits over the unauthorized use of its copyrighted content, you would expect it to have strong scraping protections (foreshadowing). So, as an amateur web scraper developer, I wanted to see how difficult it would be to scrape the NYT.

Investigation

Let's first look at the robots.txt for some clues.

```text
# Sitemaps
Sitemap: https://www.nytimes.com/sitemaps/new/news.xml.gz
Sitemap: https://www.nytimes.com/sitemaps/new/sitemap.xml.gz
```
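If you want to pull those `Sitemap:` entries out of robots.txt programmatically, only a little parsing is needed. Below is a minimal sketch, assuming the usual `Sitemap: <url>` line format; `extractSitemaps` is a hypothetical helper name, and the directive is matched case-insensitively just to be lenient.

```typescript
// Hypothetical helper: collect the URLs from `Sitemap:` lines in a robots.txt body.
function extractSitemaps(robotsTxt: string): string[] {
  const urls: string[] = [];
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    // Drop comments (everything after '#') and surrounding whitespace.
    const line = rawLine.split('#')[0].trim();
    // Match "Sitemap: <url>" in any letter case and capture the URL.
    const match = /^sitemap\s*:\s*(\S+)$/i.exec(line);
    if (match) {
      urls.push(match[1]);
    }
  }
  return urls;
}

const robotsTxt = `# Sitemaps
Sitemap: https://www.nytimes.com/sitemaps/new/news.xml.gz
Sitemap: https://www.nytimes.com/sitemaps/new/sitemap.xml.gz`;

console.log(extractSitemaps(robotsTxt));
```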

Hmm, the only items of interest are the sitemaps. Let's switch gears and look at an article and its potential bot protection. For those following along at home:

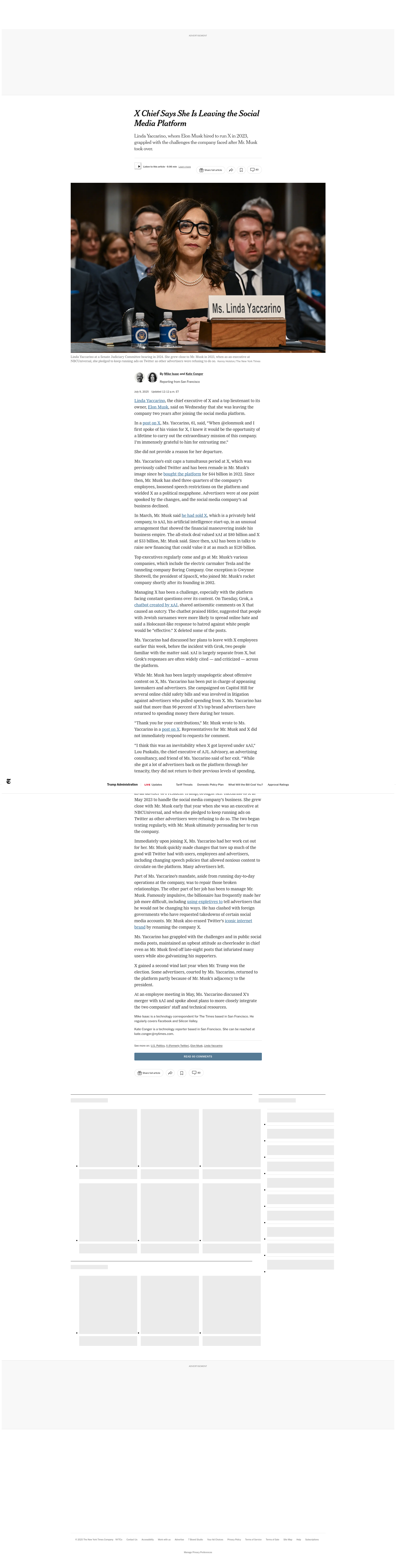

```sh
curl "https://www.nytimes.com/2025/07/09/technology/linda-yaccarino-x-steps-down.html"
```

The plot thickens... the response contains this script:

```html
<script>
(function(){
    if (document.cookie.indexOf('NYT-S') === -1) {
        var iframe = document.createElement('iframe');
        iframe.height = 0;
        iframe.width = 0;
        iframe.style.display = 'none';
        iframe.style.visibility = 'hidden';
        iframe.src = 'https://myaccount.nytimes.com/auth/prefetch-assets';
        document.body.appendChild(iframe);
    }
})();
</script>
```
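To see exactly which cookie strings trigger that hidden iframe, the script's condition can be restated as a pure function. This is a sketch: `shouldLoadPrefetchIframe` is a hypothetical name, and it models only the client-side snippet above, not anything the server does.

```typescript
// Hypothetical restatement of the inline script's condition:
//   if (document.cookie.indexOf('NYT-S') === -1) { /* inject hidden iframe */ }
function shouldLoadPrefetchIframe(cookieString: string): boolean {
  // Plain substring search: the cookie's value (and even its exact name) is never checked.
  return cookieString.indexOf('NYT-S') === -1;
}

const cases = ['', 'foo=bar', 'NYT-S=enroneurope', 'foo=bar; NYT-S=anything', 'NOT-NYT-S=1'];
for (const c of cases) {
  console.log(JSON.stringify(c), shouldLoadPrefetchIframe(c) ? 'iframe injected' : 'no iframe');
}
```

Note the last case: because the check is a substring search, any cookie whose name merely contains `NYT-S` also skips the iframe.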

What if we pass a value for that NYT-S cookie...

```sh
curl "https://www.nytimes.com/2025/07/09/technology/linda-yaccarino-x-steps-down.html" -b "NYT-S=enroneurope"
```

Huh, it really was that easy.

Code

Here is a quick crawler I whipped up with Crawlee:

```typescript
// main.ts
import { CheerioCrawler, Sitemap, ProxyConfiguration } from 'crawlee';
import { router } from './routes.js';

const MAX_CONCURRENCY = 1;
const MAX_REQUESTS_PER_MINUTE = 500;
const MAX_REQUEST_RETRIES = 10;

// Send curl's minimal headers instead of browser-like ones.
const curlHeaders = {
    'Accept': '*/*',
    'User-Agent': 'curl/8.14.1', // Important
};

const crawler = new CheerioCrawler({
    // proxyConfiguration: new ProxyConfiguration({ proxyUrls: ['...'] }),
    requestHandler: router,
    // maxRequestsPerCrawl: 10,
    maxConcurrency: MAX_CONCURRENCY,
    maxRequestsPerMinute: MAX_REQUESTS_PER_MINUTE,
    maxRequestRetries: MAX_REQUEST_RETRIES,
    preNavigationHooks: [
        (crawlingContext, gotOptions) => {
            // Attach the curl headers and the NYT-S cookie to every request.
            gotOptions.headers = {
                ...gotOptions.headers,
                ...curlHeaders,
                Cookie: 'NYT-S=enroneurope;',
            };
        },
    ],
    // The error is passed as the second argument, not as a property of the context.
    async failedRequestHandler({ request, log }, error) {
        log.error(`Request ${request.url} failed too many times.`, { error: error.message });
    },
});

// Seed the queue with every URL listed in the July 2025 sitemap.
const { urls } = await Sitemap.load('https://www.nytimes.com/sitemaps/new/sitemap-2025-07.xml.gz');
await crawler.addRequests(urls);
await crawler.run();
```

```typescript
// routes.ts
import { createCheerioRouter } from 'crawlee';
import { parseHTML } from 'linkedom';
import { Readability } from '@mozilla/readability';
import DOMPurify from 'dompurify';

export const router = createCheerioRouter();

// DOMPurify needs a DOM window; create one linkedom window and reuse it for every page.
const { window } = parseHTML('<html></html>');
const dompurify = DOMPurify(window);

router.addDefaultHandler(async ({ request, body, log, pushData }) => {
    // `body` can be a string or a Buffer, so normalize it before parsing.
    const { document } = parseHTML(body.toString());
    // Readability extracts the main article (title, byline, content) from the page.
    const reader = new Readability(document);
    const article = reader.parse();

    if (article) {
        log.info(`${article.title}`, { url: request.loadedUrl });
        // Sanitize the extracted HTML before storing it.
        const clean = dompurify.sanitize(article.content ?? '');
        await pushData({
            url: request.loadedUrl,
            title: article.title,
            byline: article.byline,
            content_html: clean,
            content_text: article.textContent,
            length: article.length,
        });
    } else {
        // Store a placeholder record so unparseable URLs still appear in the dataset.
        log.info('Readability.js could not parse article', { url: request.loadedUrl });
        await pushData({
            url: request.loadedUrl,
            title: 'N/A',
            byline: 'N/A',
            content_html: 'N/A',
            content_text: 'N/A',
            length: 'N/A',
        });
    }
});
```

Some things to note:

- Using the same headers as curl (`Accept: */*` and the curl `User-Agent`) is very important
- A higher concurrency and rate limit can be used, but the NYT may start blocking the scraper
- linkedom was used instead of JSDOM because it handles improper CSS classes more robustly
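For a sense of scale, `MAX_REQUESTS_PER_MINUTE = 500` works out to, on average, at most one request every 60,000 / 500 = 120 ms (about 8.3 per second). With `MAX_CONCURRENCY = 1`, each request must also finish before the next one starts, so throughput is bounded by whichever is slower: the rate cap or the response time. The helper below is a hypothetical back-of-the-envelope estimator, not part of Crawlee; it assumes the per-minute budget is spread evenly, which simplifies how Crawlee actually throttles, and the URL count and latency in the example are made-up inputs.

```typescript
// Hypothetical estimator for a serial crawl under a per-minute request cap.
// Assumes concurrency 1 (each request waits for the previous one to finish)
// and that the per-minute cap is spread evenly across the minute.
function estimateCrawlMinutes(
  urlCount: number,
  maxRequestsPerMinute: number,
  avgResponseMs: number,
): number {
  const minIntervalMs = 60_000 / maxRequestsPerMinute; // 120 ms at 500 requests/minute
  const effectiveIntervalMs = Math.max(minIntervalMs, avgResponseMs);
  return (urlCount * effectiveIntervalMs) / 60_000;
}

// Example inputs only: 10,000 URLs and a 300 ms average response time are assumptions.
console.log(estimateCrawlMinutes(10_000, 500, 300).toFixed(1), 'minutes');
```

Raising `MAX_CONCURRENCY` only helps while the response time, not the rate cap, is the bottleneck.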